
In this post I'm going to explain how I migrated the Control Tower AFT CodePipelines to GitHub workflows.

Wait, why?

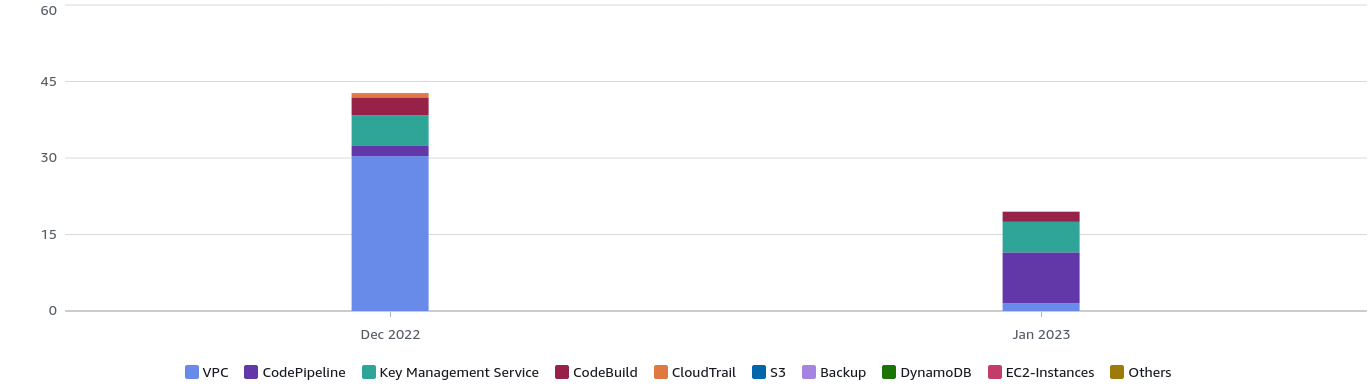

Simply put, to save costs. These are the monthly costs of updating 12 AWS accounts:

| Service | Service total | December 2022 | January 2023 |
|---|---|---|---|
| VPC costs | $31.86 | $30.36 | $1.50 |
| CodePipeline costs | $12.00 | $2.00 | $10.00 |
| Key Management Service costs | $11.98 | $5.99 | $5.99 |
| CodeBuild costs | $5.49 | $3.54 | $1.95 |

- VPC costs: I described in my previous post how to reduce them through a custom flag.

- CodePipeline and CodeBuild costs: The main cost here comes from pipeline executions. We will dive into it in the next section.

- KMS costs: The cost of using the secrets created with Control Tower AFT.

Pipelines

There are two types of account customization:

- aft-global-customization in the repository learn-terraform-aft-global-customizations

- aft-account-customization in the repository learn-terraform-aft-account-customizations

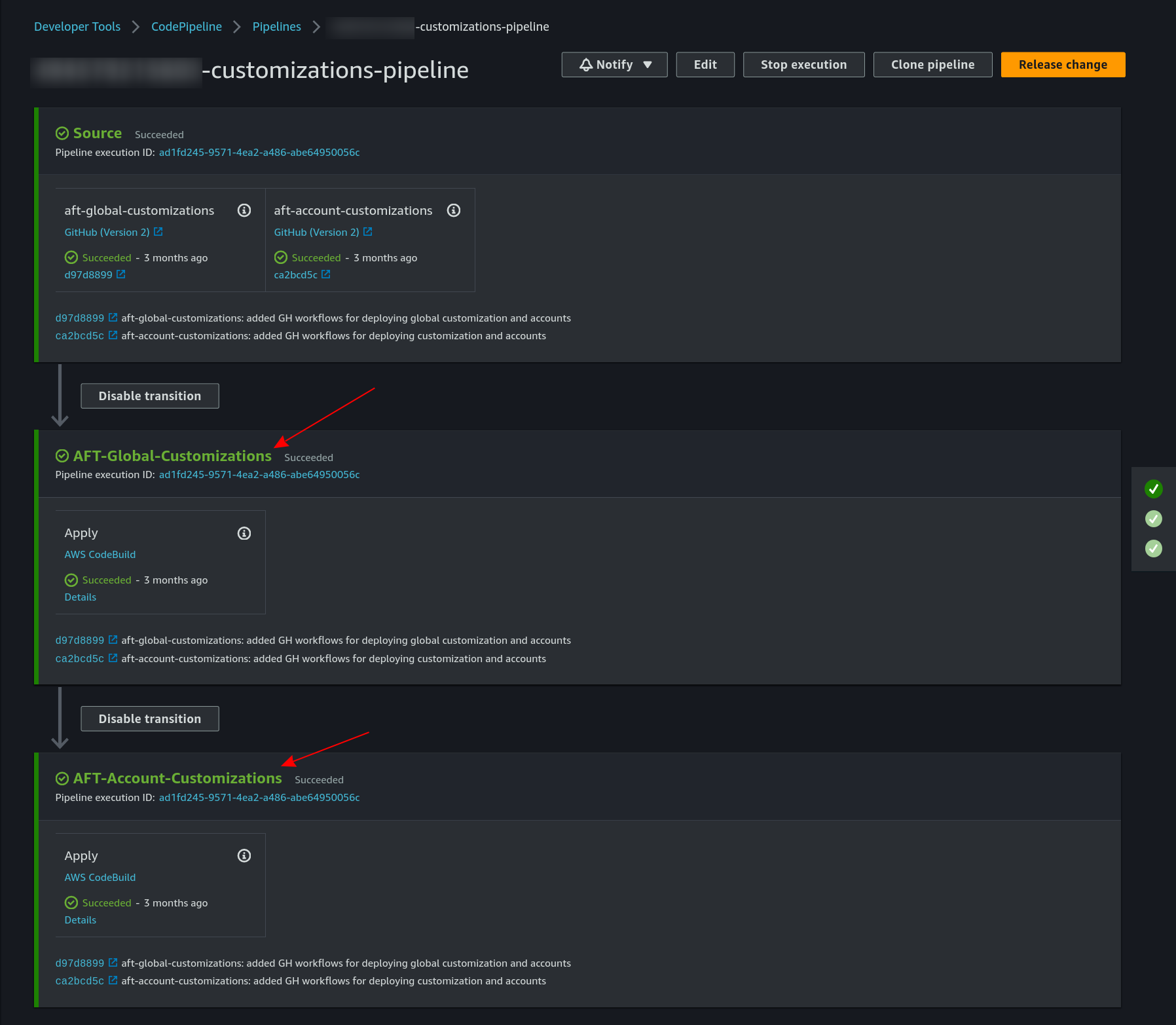

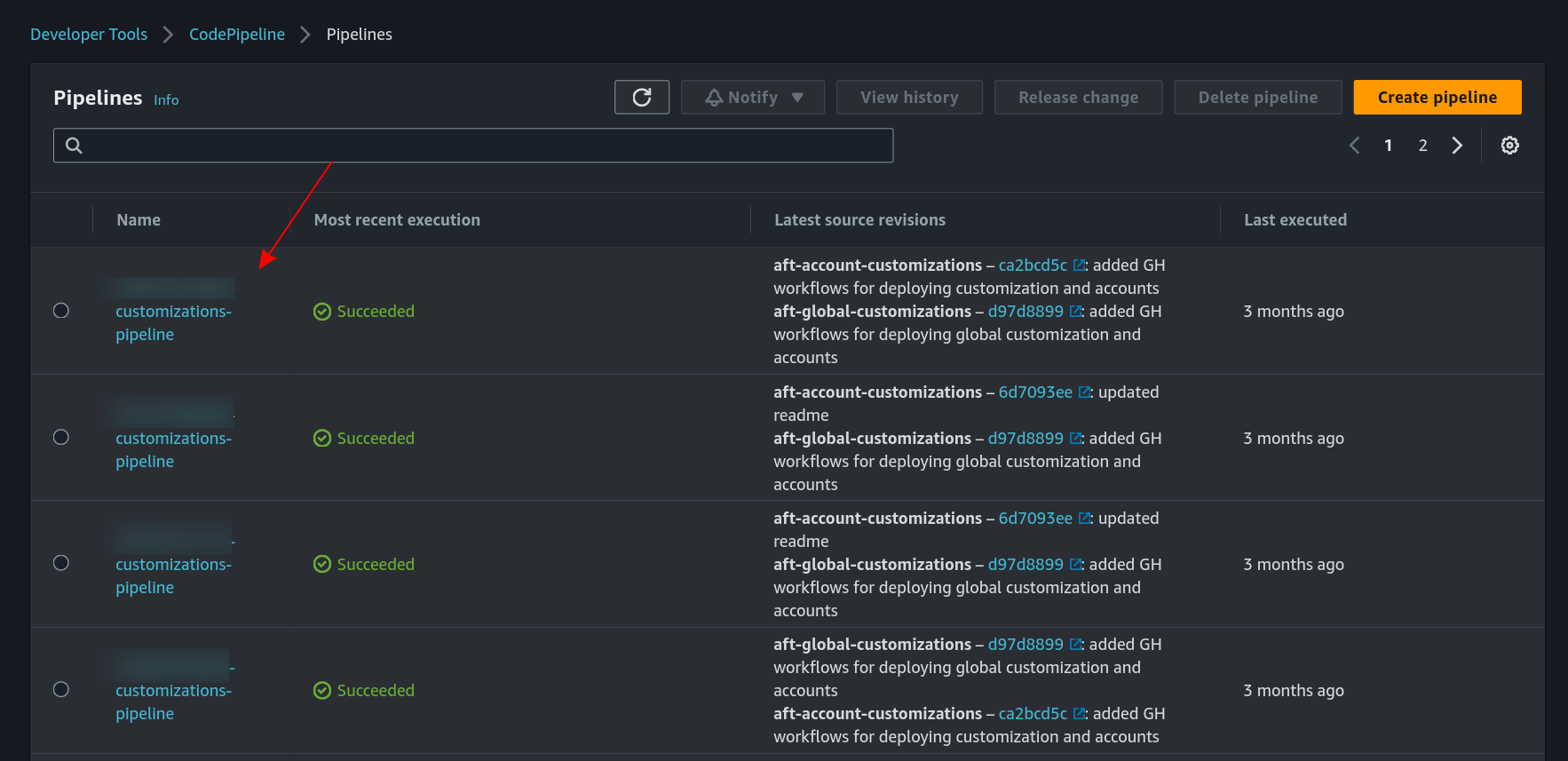

Both run in the same CodePipeline, named {ACCOUNT_ID}-customizations-pipeline.

There is one copy of this pipeline for each AWS account.

So, to update all the accounts, every one of those pipelines has to be executed.
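To make "one execution per account" concrete, here is a minimal sketch (not part of AFT) that derives each pipeline name from the `{ACCOUNT_ID}-customizations-pipeline` pattern and starts every pipeline in turn. The function names, the `DryRunClient` class and the second account ID are hypothetical. The `client` argument is assumed to be a boto3 CodePipeline client (`boto3.client("codepipeline")`); `start_pipeline_execution(name=...)` is the only AWS call used.

```python
from typing import Iterable, List


def pipeline_name(account_id: str) -> str:
    """Build the name following the {ACCOUNT_ID}-customizations-pipeline pattern."""
    return f"{account_id}-customizations-pipeline"


def start_all(client, account_ids: Iterable[str]) -> List[str]:
    """Start one execution per account and return the pipeline names triggered.

    `client` is assumed to be boto3.client("codepipeline") or any object
    exposing the same start_pipeline_execution(name=...) method.
    """
    started = []
    for account_id in account_ids:
        name = pipeline_name(account_id)
        client.start_pipeline_execution(name=name)
        started.append(name)
    return started


class DryRunClient:
    """Hypothetical stand-in that only prints, so the sketch runs without AWS access."""

    def start_pipeline_execution(self, name: str) -> dict:
        print(f"would start {name}")
        return {"pipelineExecutionId": "dry-run"}


if __name__ == "__main__":
    # The first account ID appears in this post; the second one is made up.
    start_all(DryRunClient(), ["114628476372", "000000000000"])
```

With 12 accounts, every change to the customizations means 12 pipeline executions, which is the cost this post is trying to reduce.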

Migrating to GH workflows

Let me first set the scope: I want to reduce the CodePipeline cost by executing GH workflows instead.

My initial approach is to create GH workflows in the global-customization and account-customization repositories to apply customizations to any account. This will work for existing accounts, but a newly created account will still go through the CodePipeline.

Okay, let's start.

First, let me look at the pipeline specs.

Both share a common structure:

- install: set up the environment variables for the execution and install the dependencies

- pre_build: execute a Python script

- build:

  - build backend.tf and aft-providers.tf from the templates backend.jinja and aft-providers.jinja. Jinja is a templating language that, combined with Python, simplifies filling in file templates. In this case, it helps us build those Terraform files dynamically for each account.

  - terraform init

  - terraform apply

- post_build: execute a Python script, useful for post-run cleanup actions

To keep it simple, I'm going to skip the pre_build and post_build parts.

First, I map the details of each account into a JSON file like this one:

accounts/arepabank-dev.json

    {
      "account_id": "114628476372",
      "env": "dev"
    }

If we list those files and provide them to strategy.matrix, we can update multiple accounts at the same time.
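As a rough illustration of that listing step, the sketch below collects the file names under `accounts/` and serializes them as the JSON array string that a matrix can consume through `fromJSON()`. The function names are hypothetical and this is not necessarily how the repository's own workflows do it; it only assumes the `accounts/<name>.json` layout shown above.

```python
import json
from pathlib import Path
from typing import List


def list_accounts(accounts_dir: str = "accounts") -> List[str]:
    """Return the account names (file names without .json), sorted for stable output."""
    return sorted(path.stem for path in Path(accounts_dir).glob("*.json"))


def matrix_input(accounts_dir: str = "accounts") -> str:
    """Serialize the account names as a JSON array string for fromJSON()."""
    return json.dumps(list_accounts(accounts_dir))


if __name__ == "__main__":
    # A workflow step could append "accounts=<this value>" to $GITHUB_OUTPUT
    # so that a later job can pass it on as the `accounts` input.
    print(matrix_input())
```

Each entry is the file name without its extension, so the workflow can rebuild the path as `accounts/<name>.json`.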

Next is the main workflow code:

    on:
      workflow_call:
        inputs:
          accounts:
            required: true
            type: string
          apply:
            required: false
            type: boolean
            default: false

    env:
      # use GH secrets to store sensitive credentials
      AFT_ACCOUNT_ID: ${{ secrets.AFT_ACCOUNT_ID }}
      AWS_ACCESS_KEY_ID: ${{ secrets.AFT_ROOT_EXECUTOR_AK }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AFT_ROOT_EXECUTOR_SK }}
      AWS_DEFAULT_REGION: ${{ secrets.AFT_ROOT_EXECUTOR_REGION }}
      TF_STATE_BACKEND_S3_KMS_KEY_ID: ${{ secrets.TF_STATE_S3_BUCKET_KMS_ID }}

    jobs:
      deployment:
        name: ${{ matrix.account }}
        runs-on: ubuntu-latest
        environment: ${{ inputs.apply == true && matrix.account }}
        strategy:
          fail-fast: false
          matrix:
            account: ${{ fromJSON(inputs.accounts) }} # accounts is an array with JSON files names
        steps:
          - name: Checkout
            uses: actions/checkout@v3

          - name: setup account properties
            env:
              ACCOUNT_FILE: "accounts/${{ matrix.account }}.json"
            run: |
              ACCOUNT_ID=$(jq -r '.account_id' "$ACCOUNT_FILE")
              ACCOUNT_ENV=$(jq -r '.env' "$ACCOUNT_FILE")

              # mapping ENV VARS from CodePipeline spec
              echo "EXECUTION_TIMESTAMP=$(date '+%Y-%m-%d %H:%M:%S')" >> "$GITHUB_ENV"
              echo "TF_DISTRIBUTION_TYPE=oss" >> "$GITHUB_ENV"
              echo "AWS_BACKEND_REGION=$AWS_DEFAULT_REGION" >> "$GITHUB_ENV"
              echo "AWS_BACKEND_BUCKET=aft-backend-$AFT_ACCOUNT_ID-primary-region" >> "$GITHUB_ENV"
              echo "AWS_BACKEND_KEY=$ACCOUNT_ID-aft-global-customizations/terraform.tfstate" >> "$GITHUB_ENV"
              echo "AWS_BACKEND_DYNAMODB_TABLE=aft-backend-$AFT_ACCOUNT_ID" >> "$GITHUB_ENV"
              echo "AWS_BACKEND_KMS_KEY_ID=$TF_STATE_BACKEND_S3_KMS_KEY_ID" >> "$GITHUB_ENV"
              # BE SURE credentials have permission on the bucket
              echo "AWS_BACKEND_ROLE_ARN=" >> "$GITHUB_ENV"
              # BE SURE credentials can assume this role / or create a role
              echo "AWS_PROVIDER_ROLE_ARN=arn:aws:iam::$ACCOUNT_ID:role/AWSAFTExecution" >> "$GITHUB_ENV"

              # file paths
              echo "AWS_BACKEND_PATH=terraform/backend.jinja" >> "$GITHUB_ENV"
              echo "AWS_PROVIDER_PATH=terraform/aft-providers.jinja" >> "$GITHUB_ENV"

          - name: build aft-providers.tf and backend.tf
            run: |
              pip install Jinja2
              ./scripts/aft-providers-builder.py
              cat "terraform/aft-providers.tf"
              ./scripts/backend-builder.py
              cat "terraform/backend.tf"

          - name: Terraform Plan
            id: tf-plan
            working-directory: terraform
            run: |
              TF_PLAN_PATH="$GITHUB_WORKSPACE/accounts/${{ matrix.account }}.tfplan"
              echo "TF_PLAN_PATH=$TF_PLAN_PATH" >> "$GITHUB_ENV"
              terraform init
              terraform plan -out="$TF_PLAN_PATH"

          - name: terraform apply
            if: ${{ inputs.apply == true }}
            working-directory: terraform
            run: |
              terraform apply "$TF_PLAN_PATH"
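The naming rules in the "setup account properties" step are easier to check in isolation. The sketch below restates them in Python as a plain function so they can be tested locally before touching the workflow. The function name is hypothetical, and the AFT account ID and region in the usage part are placeholders; the name patterns themselves are copied from the workflow above.

```python
import json
from typing import Dict


def backend_settings(account: Dict[str, str], aft_account_id: str, region: str) -> Dict[str, str]:
    """Derive the per-account variables that the workflow step exports."""
    account_id = account["account_id"]
    return {
        "AWS_BACKEND_REGION": region,
        "AWS_BACKEND_BUCKET": f"aft-backend-{aft_account_id}-primary-region",
        "AWS_BACKEND_KEY": f"{account_id}-aft-global-customizations/terraform.tfstate",
        "AWS_BACKEND_DYNAMODB_TABLE": f"aft-backend-{aft_account_id}",
        "AWS_PROVIDER_ROLE_ARN": f"arn:aws:iam::{account_id}:role/AWSAFTExecution",
    }


if __name__ == "__main__":
    # Account content copied from accounts/arepabank-dev.json in this post;
    # the AFT account ID and region are placeholders, not real values.
    account = json.loads('{"account_id": "114628476372", "env": "dev"}')
    for name, value in backend_settings(account, "111111111111", "eu-west-1").items():
        print(f"{name}={value}")
```

Note that the state key embeds the target account ID, so each account gets its own Terraform state, while the bucket and lock table live in the AFT account.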

The Python code that renders backend.tf from the backend.jinja template is:

    #!/usr/bin/python3
    import os

    from jinja2 import Environment, FileSystemLoader

    # every setting comes from the env vars exported by the workflow
    backend_path = os.getenv('AWS_BACKEND_PATH')
    timestamp = os.getenv('EXECUTION_TIMESTAMP')
    tf_distribution_type = os.getenv('TF_DISTRIBUTION_TYPE')
    backend_region = os.getenv('AWS_BACKEND_REGION')
    bucket = os.getenv('AWS_BACKEND_BUCKET')
    key = os.getenv('AWS_BACKEND_KEY')
    dynamodb_table = os.getenv('AWS_BACKEND_DYNAMODB_TABLE')
    kms_key_id = os.getenv('AWS_BACKEND_KMS_KEY_ID')
    aft_admin_role_arn = os.getenv('AWS_BACKEND_ROLE_ARN')

    # load the template relative to the repository root
    file_loader = FileSystemLoader('.')
    env = Environment(loader=file_loader)
    template = env.get_template(backend_path)

    output_content = template.render(
        timestamp=timestamp,
        tf_distribution_type=tf_distribution_type,
        region=backend_region,
        bucket=bucket,
        key=key,
        dynamodb_table=dynamodb_table,
        kms_key_id=kms_key_id,
        aft_admin_role_arn=aft_admin_role_arn
    )

    # terraform/backend.jinja -> terraform/backend.tf
    output_filename = backend_path.replace(".jinja", ".tf")

    # to save the results
    with open(output_filename, "w") as fh:
        fh.write(output_content)
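One fragile spot in a script like this is that `os.getenv` returns `None` for an unset variable, so a missing value only surfaces later (for example when `.replace` is called on the path). A small guard, sketched below, fails fast with a readable message instead. The `require_env` helper is hypothetical and not part of the original scripts; empty strings are accepted on purpose, because the workflow sets `AWS_BACKEND_ROLE_ARN` to an empty value.

```python
import os
from typing import Dict, Iterable, Mapping


def require_env(names: Iterable[str], environ: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return the requested variables, raising if any of them is unset."""
    names = list(names)
    missing = [name for name in names if name not in environ]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in names}


if __name__ == "__main__":
    # Variable names taken from the workflow; run this inside the job
    # (or export the variables locally) to see the values.
    settings = require_env(["AWS_BACKEND_PATH", "AWS_BACKEND_BUCKET", "AWS_BACKEND_KEY"])
    print(settings["AWS_BACKEND_PATH"])
```

Passing the mapping as a parameter keeps the helper easy to test with a plain dictionary instead of the real environment.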

You can check the other workflows and scripts to see how I integrated this in a PR workflow:

- 1-pr.yml

- 2-deploy-main.yml

- shared-tf-plan.yml

- shared-get-workspaces.yml

- backend-builder.py

- aft-providers-builder.py

About me

I'm a Software Engineer with experience as a Developer and in DevOps. The technologies I have worked with are DotNet, Terraform and AWS. For the last one, I hold the Developer Associate certification. I define myself as a challenge seeker and a team player. I simply give it my all to deliver high-quality solutions. On the other hand, I like to analyze and improve processes, promote productivity and document implementations (yes, I'm a developer who likes to document 🧑‍💻).

You can check my experience here.

Personal Blog - cangulo.github.io

GitHub - Carlos Angulo Mascarell - cangulo

LinkedIn - Carlos Angulo Mascarell

Twitter - @AnguloMascarell